Monitoring MySQL backups

Backups are only worth something if they run successfully. We'll set up daily MySQL backups (uploading to AWS S3) and configure alerts monitoring the backup size, duration, and errors using AnomalyAlert.

- Prerequisites

- Backing up MySQL and uploading backups

- Monitoring the backups

- Alerts for when backups don't run or fail

Prerequisites

This assumes that we already have a S3 bucket created and have the credentials (access key and access secret) to access it.

Install and configure AWS CLI

sudo apt update && sudo apt install awscli -yNote: This command is specific to systems that use the apt package manager, like Ubuntu and other Debian-based distributions. If you're using a different distribution, the command to install awscli may vary.

Now let's configure the AWS CLI with our credentials. We'll run

aws configureEnter the Access Key ID and Access Key Secret.

Backing up MySQL and uploading backups

Backing up

We'll create backups, database-by-database, and upload them to S3 object storage. Having the current time and the database name in the backup filename will make it easier to find the one we need later on.

Create a shell script calledbackupdb.sh: #!/bin/bash

# Credentials

MYSQL_USER="your_username"

MYSQL_PASSWORD="your_password"

# List of database names to backup

DATABASES=("blog" "store")

for DB in "${DATABASES[@]}"; do

TIMESTAMP=$(date "+%Y%m%d%H%M")

BACKUP_FILE="/tmp/${TIMESTAMP}-${DB}.sql.gz"

mysqldump -u $MYSQL_USER -p$MYSQL_PASSWORD $DB | gzip > $BACKUP_FILE

aws s3 cp $BACKUP_FILE $S3_BUCKET

# Remove the local backup file

rm -f $BACKUP_FILE

done This will loop through the list of database names we defined and back each one up to a compressed .sql.gz file in /tmp, then upload that file to S3 and remove the local copy.

Make the script executable using chmod +x backupdb.sh and run it by invoking ./backupdb.sh. Running it should show output of awscli uploading the backup files to S3 - and they should show up in the S3 bucket.

Scheduling daily backups

Let's add a job that runs our script daily — open crontab for editing by running crontab -e and add the following line:

0 0 * * * /home/ubuntu/backupdb.sh The actual path may be different on your system — you can check the current path by running pwd.

Monitoring the backups

While our backup process is now automated to run at regular intervals, we still lack a system for detecting failures. Such issues could arise from a variety of unexpected problems.

We'll update our script to capture a few metrics and send them to AnomalyAlert.

Here's the final script:

#!/bin/bash

# Your AnomalyAlert API key

API_KEY="YOUR_API_KEY_HERE"

# Prefix to use in monitor names (your-prefix/database-name)

MONITOR_PREFIX="db-backups/"

# Credentials

MYSQL_USER="your_username"

MYSQL_PASSWORD="your_password"

# List of database names to back up

DATABASES=("blog" "store")

# S3 bucket where backups will be uploaded

S3_BUCKET="s3://your-s3-bucket-name/path/"

for DB in "${DATABASES[@]}"; do

TIMESTAMP=$(date "+%Y%m%d%H%M")

BACKUP_FILE="/tmp/${TIMESTAMP}-${DB}.sql.gz"

BACKUP_SIZE=0

ERRORS=0

# Start timer and backup the database

start_time=$(date +%s)

mysqldump -u $MYSQL_USER -p$MYSQL_PASSWORD $DB | gzip > $BACKUP_FILE

if [ $? -eq 0 ]; then

# Measure backup size in megabytes

BACKUP_SIZE=$(du -sk $BACKUP_FILE | awk '{printf "%.2f", $1/1024}')

# Upload to S3

aws s3 cp $BACKUP_FILE $S3_BUCKET

if [ $? -ne 0 ]; then

# S3 upload failed - set errors to one

ERRORS=1

fi

else

# Backup failed - set errors to one

ERRORS=1

fi

# End timer

end_time=$(date +%s)

DURATION=$((end_time - start_time))

# Remove the local backup file

rm -f $BACKUP_FILE

# Post values to AnomalyAlert

curl -X POST "https://io.anomalyalert.com" \

-H "Content-Type: application/json" \

-d "{ \"api_key\": \"$API_KEY\", \"monitor\": \"$MONITOR_PREFIX$DB\", \"data\": { \"duration\": $DURATION, \"size\": $BACKUP_SIZE, \"errors\": $ERRORS }}"

doneThere's a couple of new variables we need to configure:

-

API_KEY- Your AnomalyAlert API key (it can be found under Settings) -

MONITOR_PREFIX- By default the database name will also be the monitor name. This prefix can be useful for organizing them (i.e.db-backups/will result in monitor namesdb-backups/blog, etc.)

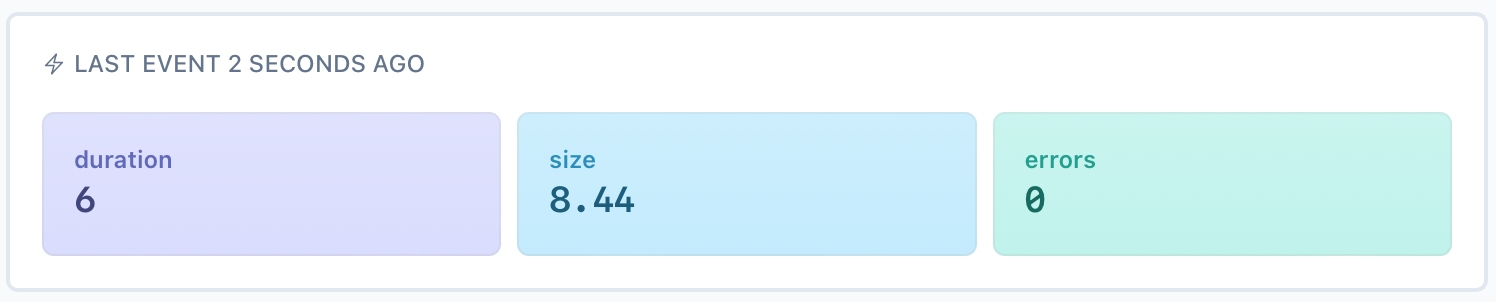

Now, whenever our backup script is triggered, we'll keep track of the following information for each database:

-

duration- amount of time, in seconds, it took to create a backup and upload it to S3 size- backup size in megabytes-

errors- whether we encountered any errors during backup (0 or 1)

Let's run the backups now (./backupdb.sh) and refresh AnomalyAlert. You should now see a monitor created for each of the databases, as well as the 3 metrics we're collecting for each of them:

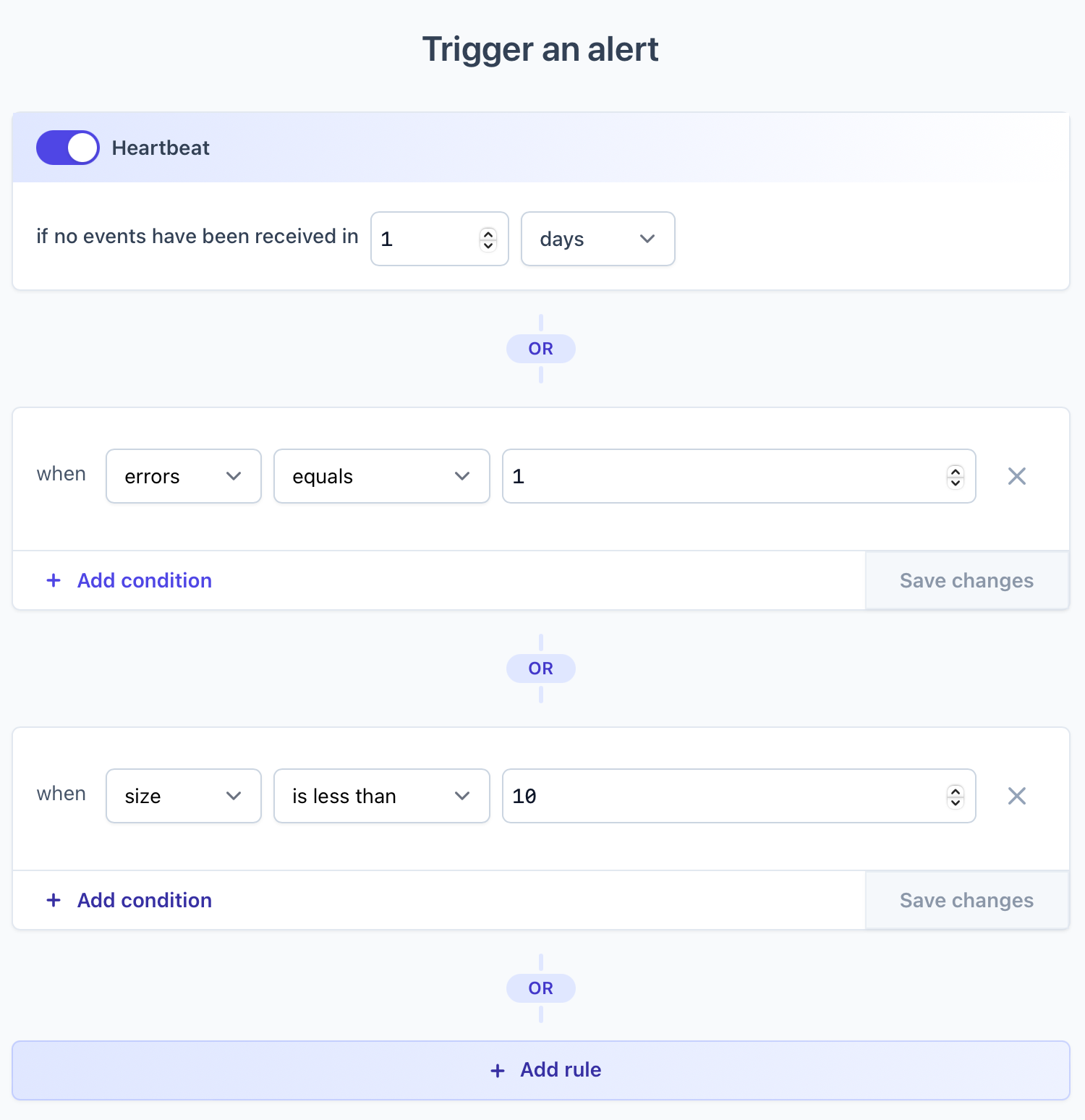

Alerts for when backups don't run or fail

With monitors and data collection in place, it's time for us to set up our alerts. Click on Configure alerts on your monitor.

We'll add 3 rules that will trigger alerts whenever either of them is violated:

- No backups have been created in more than 1 day

- There were errors during backup process

- Backup size is less than 10MB (adjust accordingly for your database size)

That's it. We can now sleep better knowing that as soon as something goes wrong with our database backups, we'll get notified!